[If USB 2.0 is too boring, learn about the details of 50 different Thunderbolt 4 docks (yep, you read that right, there are 50 different models) and find out which ones are identical inside.]

USB 2.0 is over 20 years old, so why do modern USB-C and USB 3.x hubs still have 2.0 ports? Shouldn’t they all be USB 3.x by now?

Some folks assume this is simply a cost-saving measure: USB 2.0 is cheaper to implement than 3.x, and devices like keyboards and mice can't take advantage of USB 3 anyway. But it's a little more nuanced than that. Before I started doing device teardowns over the last year, I had always thought that manufacturers selected cheap USB 3.x hub chipsets with few ports and supplemented them with even cheaper USB 2.0 hub chipsets to increase the total port count. But having two chips is expensive.

The real answer lies in the pinout of USB connectors. USB 3.x and USB-C connectors carry both SuperSpeed data pairs (SSTX+/- and SSRX+/- on USB 3.x Type-A/B; TX+/- and RX+/- on USB-C) and a separate USB 2.0 data pair (D+/D-):

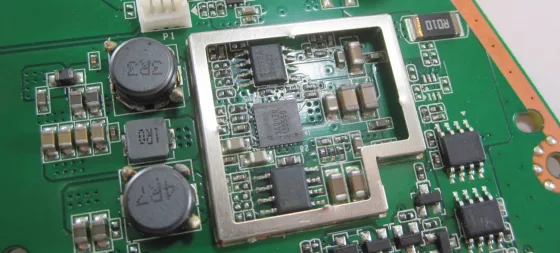

Physical 3.x ports have all of these pins connected for backwards compatibility with USB 2.0 devices like mice and keyboards. But devices embedded inside a hub only need to connect to the pins they actually use. For example, SD card readers and Gigabit Ethernet chipsets often use only the SuperSpeed SSTX/SSRX pairs and leave the D+/D- pins unconnected. So what can we do with those unused D+/D- pins?
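For reference, here is the standard USB 3.x Type-A receptacle pinout written out as a small Python sketch, with the data signals grouped into the USB 2.0 pair and the SuperSpeed pairs (signal names are shortened from the spec's `StdA_` prefixes). The `free_pins` helper is a hypothetical illustration, not part of any library: it only shows that an embedded device wired to the SuperSpeed pairs alone leaves D+/D- unconnected.

```python
# Signal name -> pin number on a USB 3.x Standard-A receptacle.
TYPE_A_PINS = {
    "VBUS": 1,
    "D-": 2,
    "D+": 3,
    "GND": 4,
    "SSRX-": 5,
    "SSRX+": 6,
    "GND_DRAIN": 7,
    "SSTX-": 8,
    "SSTX+": 9,
}

USB2_DATA = {"D+", "D-"}                               # USB 2.0 pair (low/full/high speed)
SUPERSPEED_DATA = {"SSRX-", "SSRX+", "SSTX-", "SSTX+"}  # USB 3.x SuperSpeed pairs


def free_pins(used_signals: set[str]) -> dict[str, int]:
    """Hypothetical helper: data signals a device leaves unconnected, with pin numbers."""
    unused = (USB2_DATA | SUPERSPEED_DATA) - used_signals
    return {name: TYPE_A_PINS[name] for name in sorted(unused)}


# An embedded SD card reader or Gigabit Ethernet chip on the SuperSpeed pairs only:
print(free_pins(SUPERSPEED_DATA))  # {'D+': 3, 'D-': 2}
```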

Enter port splitting, also known as port bifurcation or USB link sharing.

According to Microchip, port splitting “allows for the USB3.1 Gen1 and USB 2.0 portions of downstream ports… to operate independently and enumerate two separate devices in parallel…”. Cypress documents a similar “shared link” scheme for some of their USB chipsets. Microchip's product guide also includes guidance on how to implement port splitting.

Essentially, this gets you a USB 2.0 port for free. For example, a 4-port hub chipset can become a 6-in-1 hub: two downstream USB 3.x ports, Ethernet, an SD card reader, and two bonus USB 2.0 ports split off from the Ethernet and SD card reader connections. How's that for cost savings?

There are some caveats with this approach. The USB 3.x device must be embedded, not something that can be unplugged. The USB 2.0 device can either be embedded, like an audio chipset, or be an external port. Because of the way USB hubs are set up, a fault on either the USB 3.x or the USB 2.0 device may disconnect both. So port splitting can be less reliable than truly distinct ports when problematic or non-compliant devices are attached. Also, full USB 2.0 backwards compatibility is broken on a split port: the embedded USB 3.x device has no D+/D- connection left to fall back to if its SuperSpeed link fails.
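The 4-port example above can be modeled as a list of downstream ports, each with an independent SuperSpeed half and USB 2.0 half. This is only an illustrative sketch: the `DownstreamPort` class and the device names are hypothetical and do not reflect any vendor's configuration format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DownstreamPort:
    """One downstream port of a hub chipset, with its two independent halves."""
    superspeed: Optional[str] = None  # device on the SSTX/SSRX pairs
    usb2: Optional[str] = None        # device on the D+/D- pair


def hub_4_port_with_splitting() -> list[DownstreamPort]:
    # Hypothetical layout: two normal ports, two split ports.
    return [
        DownstreamPort(superspeed="USB-A port 1", usb2="USB-A port 1"),       # normal external port
        DownstreamPort(superspeed="USB-A port 2", usb2="USB-A port 2"),       # normal external port
        DownstreamPort(superspeed="Gigabit Ethernet", usb2="USB 2.0 port 3"),  # split
        DownstreamPort(superspeed="SD card reader", usb2="USB 2.0 port 4"),    # split
    ]


def user_visible_endpoints(ports: list[DownstreamPort]) -> set[str]:
    """Distinct ports/devices a user sees; an unsplit port counts only once."""
    names = set()
    for port in ports:
        for half in (port.superspeed, port.usb2):
            if half is not None:
                names.add(half)
    return names


ports = hub_4_port_with_splitting()
print(len(ports), "chipset ports ->", len(user_visible_endpoints(ports)), "ports/devices")
# 4 chipset ports -> 6 ports/devices
```

Note that on the two split ports, the embedded SuperSpeed device and the USB 2.0 port share one chipset port, which is why a fault on either half can take both down.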

Other USB hub chipset vendors, such as VIA and Genesys Logic, also support this feature but don't provide full public documentation. But you can usually tell when port splitting is in use, because the total number of embedded devices plus downstream ports exceeds the hub chipset's downstream port count (assuming there isn't a second hub chip on the board). So a 6-port hub chipset might serve a combined total of 8 downstream ports and devices. And who wouldn't want an 8-in-1 instead of a 6-in-1 hub for less than $1 USD in extra parts?
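The counting rule above can be written as a tiny function. This is a sketch under stated assumptions: a single hub chip (no cascaded second hub), and each split downstream port serving exactly one SuperSpeed device plus one USB 2.0 port or device. Under those assumptions, every endpoint beyond the chip's port count needs one split port. `min_split_ports` is a hypothetical name used for illustration.

```python
def min_split_ports(chipset_ports: int, embedded_devices: int, external_ports: int) -> int:
    """Minimum number of split downstream ports needed to explain a teardown.

    Assumes one hub chip and that splitting a port adds exactly one extra
    USB 2.0 port/device, so a chip can serve at most 2 * chipset_ports endpoints.
    """
    total = embedded_devices + external_ports
    if total > 2 * chipset_ports:
        raise ValueError("too many endpoints for one chip, even with every port split")
    return max(0, total - chipset_ports)


print(min_split_ports(6, 3, 5))  # 2 (a 6-port chip serving 8 ports/devices)
print(min_split_ports(4, 2, 4))  # 2 (Ethernet + SD card, 2x USB 3.x + 2x USB 2.0)
```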

References:

- Microchip USB5816 hub controller product guide

- Cypress CYUSB3326 datasheet page 6 (thanks to /u/hubsdocks for pointing this one out)

Neat. Thank you.

I don’t know if you accept reader questions, but on the chance the answer is yes, I have been curious to know what would practically happen (in terms of performance) if a manufacturer violates the Thunderbolt 3 spec and creates, say, a 2.5m or 3m Thunderbolt 3 cable, where the only difference from their current 2m active cable is length. Would some mysterious effect lead to the cable not working at all? Would the only perceptible difference be a few feet of extra cable, or would there be a significant performance impact? Are cables typically built to just meet spec, or are some overbuilt such that they can still perform when something isn’t quite right?

Hi, there are two likely side effects. The first is power delivery: a longer cable has a larger voltage drop, may heat up a little more, and ultimately delivers less power to the laptop. The second is that high-bandwidth communication is compromised over longer cables. 40Gb/s may not be possible, and the link may just negotiate down to 20Gb/s or 10Gb/s. It's unlikely that the cable wouldn't function at all; it's more likely that it would just sync down to a lower speed. The worst case is falling back to USB 5Gb/s. I'm not sure how that would affect PCIe tunneling if you were using an external video card or something. It's plausible that the controller would just kick those devices off the bus but leave others connected.
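To put rough numbers on the voltage-drop point, Ohm's law is enough: current flows out on VBUS and back on ground, so the loop resistance grows with twice the cable length. The sketch below uses a made-up resistance of 0.02 ohm per metre per conductor and a 20 V / 5 A (100 W) load purely for illustration; real cable resistance depends on wire gauge and connector contacts and isn't given here.

```python
def vbus_drop(length_m: float, ohms_per_m: float, current_a: float) -> float:
    """Voltage lost across the cable: I * R, where R covers VBUS out and GND back."""
    loop_resistance = 2 * length_m * ohms_per_m
    return current_a * loop_resistance


def delivered_power(source_v: float, length_m: float, ohms_per_m: float, current_a: float) -> float:
    """Power reaching the laptop at a fixed current: (V_source - V_drop) * I."""
    return (source_v - vbus_drop(length_m, ohms_per_m, current_a)) * current_a


# Hypothetical 0.02 ohm/m per conductor, 20 V at 5 A.
for length in (2.0, 3.0):
    drop = vbus_drop(length, 0.02, 5.0)
    print(f"{length} m: drop {drop:.2f} V, heat {drop * 5.0:.1f} W, "
          f"delivered {delivered_power(20.0, length, 0.02, 5.0):.1f} W")
# 2.0 m: drop 0.40 V, heat 2.0 W, delivered 98.0 W
# 3.0 m: drop 0.60 V, heat 3.0 W, delivered 97.0 W
```

The drop and the heat dissipated in the cable both scale linearly with length at a fixed current, which matches the "a little more heat, a little less power" expectation rather than outright failure.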

My understanding is that certified cables are built to meet spec. The spec already has some buffer room built in to accommodate changing external interference.

Another reason is USB 3 radio interference. A lot of radio dongles for wireless keyboards and mice don't work very well when plugged into USB 3 ports, and the only solution is to get a USB 2.0 hub, plug the dongles into it, and then plug the hub into a USB 3 port. I guess having USB 2.0 ports directly on a Thunderbolt hub should help with the issue.

There is only one reason: if they didn't, nobody would buy them except young tech enthusiasts who haven't yet been burned out by the upgrade treadmill.

Hey, I still have a USB 1.1 hub from 1999 that works with my laptop from 2022. How's that for getting off the treadmill?